Ship Fast, Ship Safe, Stay Ahead

Agentic Security & Compliance

Our purpose-built platform covers every threat, regulation, and modality, so you can move at the speed of AI.


Deliver fast without compromise

Accelerate Advantage

Reduce time to certify and ship by 90% by automating security and compliance processes.

Get in Control

Instantly translate evolving AI policy into real-world protection, and prove it.

Protect Your Reputation

Stay 100% protected: continuously monitor for and protect against new and emerging threats.

Trusted by enterprises building the future with AI

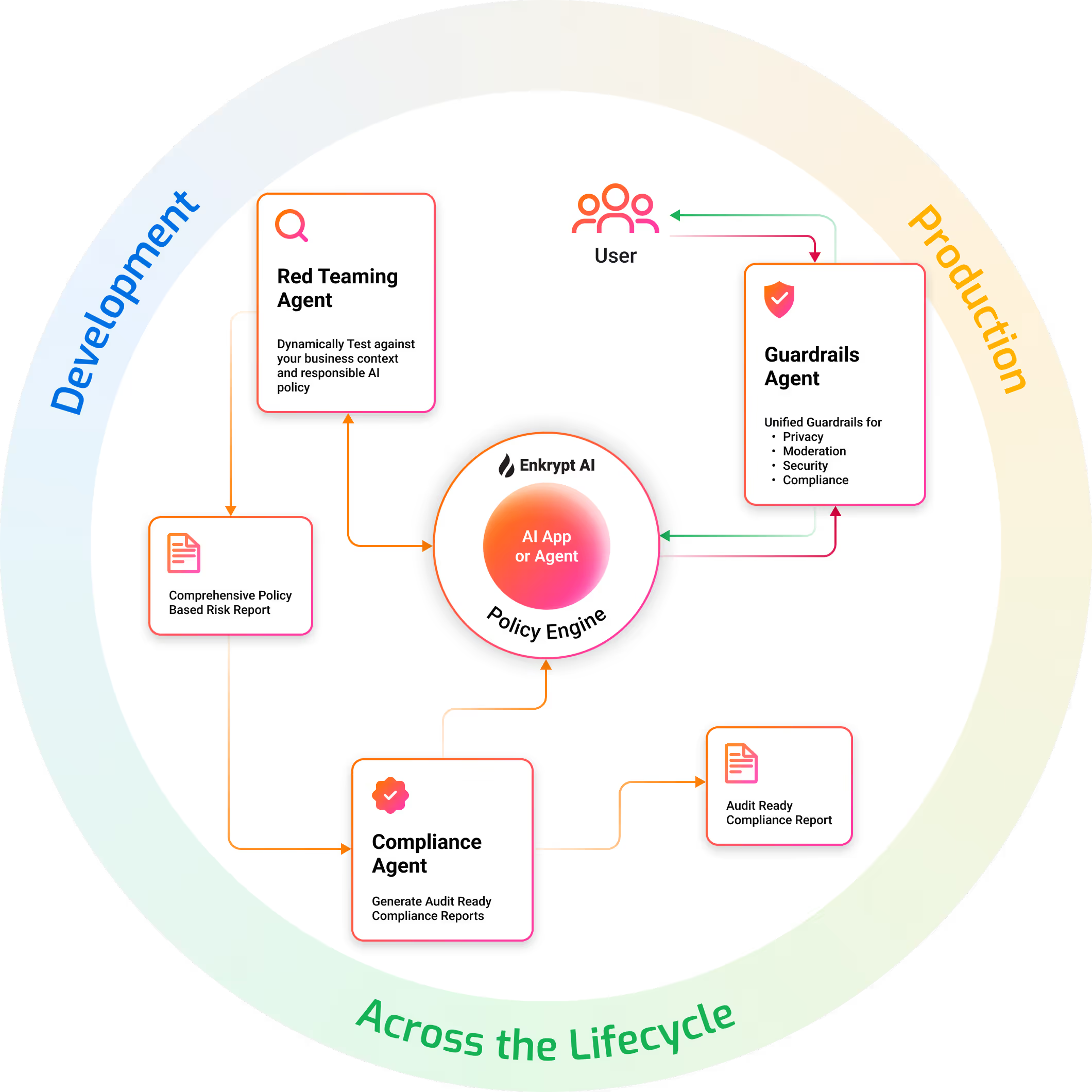

AI Security Agents That Govern and Protect

Detect

Pinpoint hidden risks

Continuously red team your AI with dynamic, use-case-specific attacks that adapt in real time, uncovering security, safety, and brand risks that static testing cannot detect.

Remove

Catch threats as they happen

Real-time guardrails stop threats fast and get smarter with every prompt, keeping your AI safe, sharp, and under control.

Monitor

Gain insight as you iterate

Stay on top of AI risk and compliance with real-time insights that show what’s working, what’s not, and where to act, so you’re always ready for audits and ahead of regulators.


Comply

Stay ahead of emerging regulation

Translate internal policies and external regulations into automated guardrails, so you can detect risks early, produce evidence of compliance, and scale AI without manual overhead.

Is your business protected?

AI is moving fast, with new threats emerging almost daily.

Keep your customers happy and your reputation safe.

Prompt injection

Jailbreaking

Data leakage

Model inversion

Adversarial attacks

Drip attacks

Policy violations

Compliance failures

Lack of explainability

Hallucinations

Overconfident or misleading outputs

Model drift

Bias and discrimination

Toxic or harmful content

Deceptive or manipulative behavior

Brand misalignment

Unmonitored agent actions

Misuse by end users

Not all models are created equal

We built the industry's first LLM Safety Leaderboard, benchmarking 200+ models with continuous red teaming. Choose the best model with confidence and move fast without risking your brand.

Enterprise AI Security Buyer’s Guide

Make the right decision for your enterprise and deploy your AI applications with confidence.

Download our Enterprise AI Security Buyer’s Guide.

Gain insight into:

- Why GenAI introduces novel risks to enterprises

- The new attack surface AI systems create

- What to look for in a modern AI security platform

- A ready-to-use framework to evaluate solutions with confidence