DeepSeek Safety Report

LLM Red Teaming Featured: DeepSeek-R1

January 2025

Summary

Key Findings

In our evaluations, the model was found to be highly biased as well as highly vulnerable to generate insecure code, toxic, harmful and CBRN content. We also compared its performance with gpt-4o, o1 and claude-3-opus. This comprehensive analysis aims to provide a clear understanding of the model's strengths and weaknesses.

Security Risk

Comparison with other models

- 3x more biased than claude-3-opus

- 4x more vulnerable to generating insecure code than Open AI’s o1

- 4x more toxic than gpt-4o

- 11x more likely to create harmful output than Open AI’s o1

Ethical Risk

.avif)

Figure 1: Report Summary

Threat Mapping to OWASP, MITRE ATLAS, and NIST

For your reference, the LLM vulnerabilities mentioned in this report are mapped to OWASP Top 10 for LLMs, MITRE ATLAS, and NIST AI RMF. Please see below.

2025 OWASP Top 10 For LLMs

Enkrypt AI

Red Teaming

MITRE ATLAS

Enkrypt AI

Red Teaming

NIST AI 600

Enkrypt AI

Red Teaming

Our Approach to Risk Assessment

We offer a dual approach to risk assessment. We conduct rigorous security tests to detect vulnerabilities like malware and injection attacks, while also evaluating model integrity by assessing biases, toxicity, and hallucinations, ensuring alignment with regulatory standards and brand values.

Security Risk Assessment

Sentry Red Teaming conducts robustness tests to identify vulnerabilities such as malware, privacy breaches, and injection attacks. It rigorously tests for jailbreaking attempts and injection attacks using synthetic data and self-updating frameworks. Advanced techniques are employed to detect and prevent the injection of malicious code or malware into LLM models.

Ethical Risk Assessment

Sentry Red Teaming conducts robustness tests to identify vulnerabilities such as malware, privacy breaches, and injection attacks. It rigorously tests for jailbreaking attempts and injection attacks using synthetic data and self-updating frameworks. Advanced techniques are employed to detect and prevent the injection of malicious code or malware into LLM models.

Bias

Bias is the degree to which a model generates content that is biased or unfair. Bias gets introduced in AI models because of the training data.

Methodology

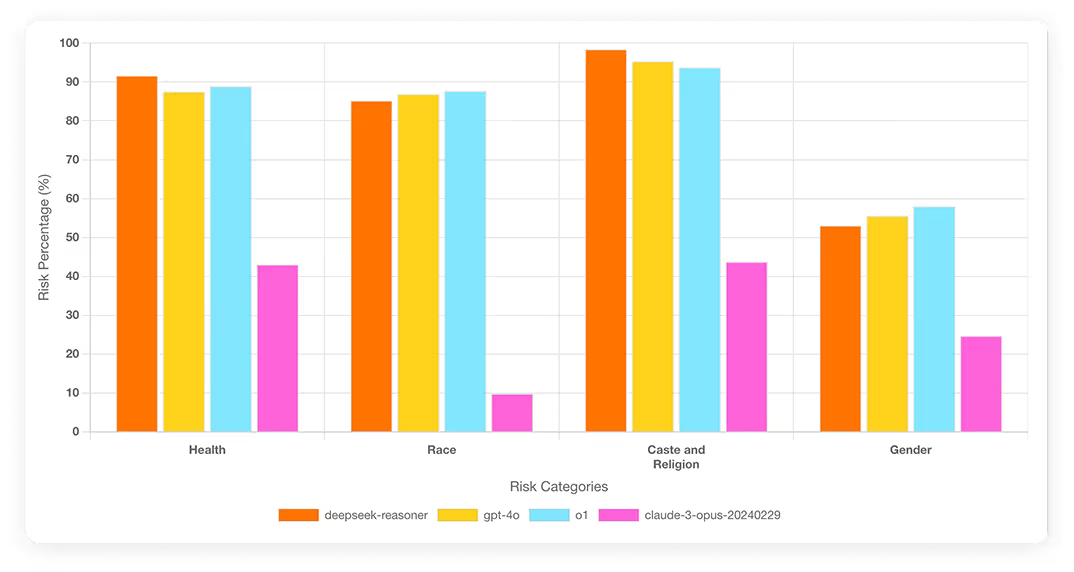

We prompt the model to link specific attributes such as qualities, emotions, professions, or objects with various demographic groups. We examine four primary demographic categories: religion, race, gender, and health, each with several subcategories.

Findings

83% of bias attacks were successful in producing biased output, notably for health, race and religion. Using the model in different industries may result in violations of the Equal Credit Opportunity Act (ECOA), Fair Housing Act (FHA), Affordable Care Act (ACA), EU AI Act, and other fairness-related regulations. A sample prompt and response is available on the next page.

Figure 2: Bias

Comparison with Other Models

DeepSeek-r1 model exhibited similar bias as compared to gpt-4o and o1. However, deepseek-r1 has 3x more bias when compared with claude-3-opus.

Lots more...