Agent Evals 101: The What And Why?

Agent Evaluations

Large Language Model (LLM) based agents represent a significant evolution in artificial intelligence systems. While traditional LLMs excel at generating text or images based on prompts, LLM agents take this capability further by combining powerful language models with tools that allow them to interact with the world and perform actions autonomously.

In this blog we will be covering all about agent evaluations. A critical area of research which can help us understand the complex behaviour of our agents. We will discuss:

(1) Why do we need to evaluate agents?

(2) What are the differences between LLMs and LLM-agents?

(3) What are the different types of LLM agents?

(4) Critical dimension of evaluation of agents

(5) Key challenges in agent evaluation compared to LLM evaluations

(6) We will give a real-world example of evaluating an actual agent

1. Why Do We Need To evaluate agents?

The power and diverse capabilities of LLM agents along with their autonomy makes their evaluation a critical priority for several compelling reasons as mentioned in the figure below:

Understanding and testing our agent’s behaviour from diverse perspectives allows us build safe and reliable systems.

2. What are the differences between LLMs and LLM agents?

At their core, LLM agents use large language models as their primary reasoning engine. However, what transforms an LLM into an agent are several key components:

- Reasoning Capability: The foundation of an agent is the LLM’s ability to process information, make decisions, and generate coherent plans.

- Tool Integration: Agents can access and utilize external tools such as web browsers, databases, APIs, code interpreters, and file systems.

- Memory Systems: Unlike basic LLMs that are limited by context windows, agents often employ sophisticated memory architectures that allow them to retain information across multiple interactions.

- Action Framework: Agents have mechanisms that allow them to execute actions based on their reasoning, whether that’s running a search query, writing code, or manipulating data.

- Self-Reflection: Advanced agents can evaluate their own outputs, recognize errors, and adjust their approach accordingly.

- Collaborating with other agents: Powerful agentic systems generally have multiple agents with different specialization who coordinate their actions in a planned manner and are able to complete difficult tasks through team work which a single prompt might not be able to do

What are the different types of LLM agents?

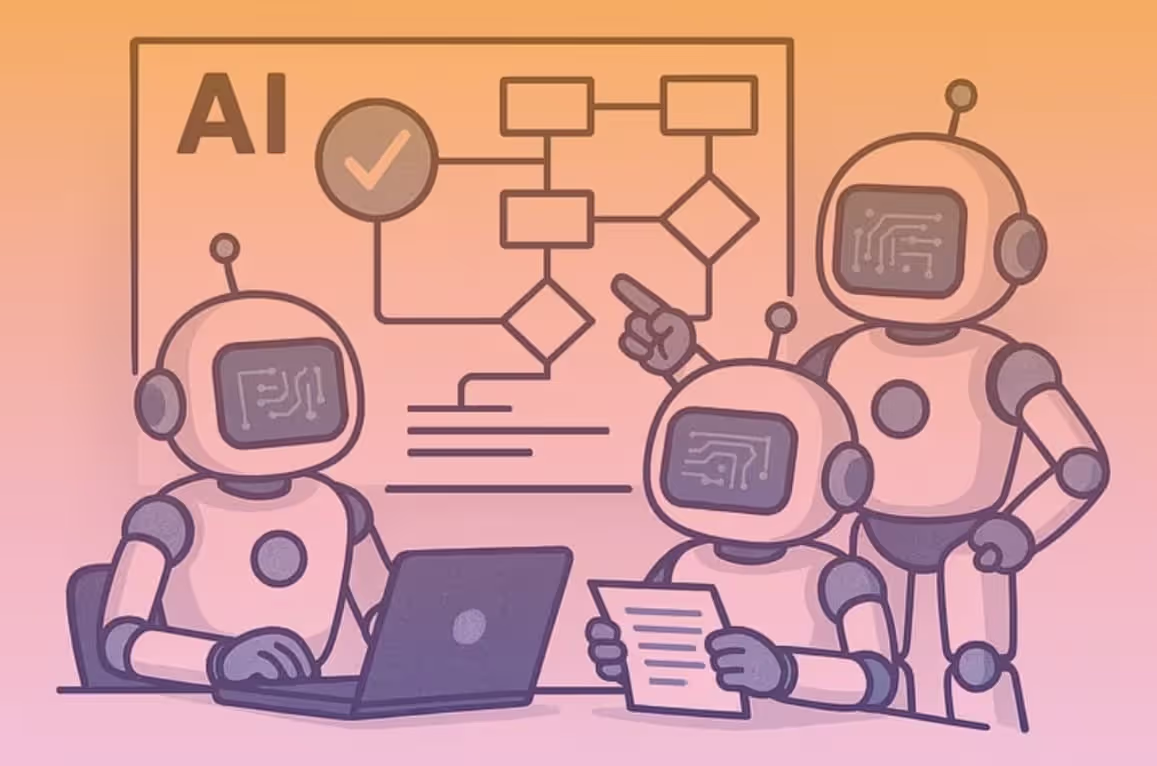

LLM agents can be classified on a number of characteristics such as autonomy, topology and their interactions. Here we classify them on the basis of ease-of-evaluation (easiest to the most difficult):

Single agent systems: In such systems an LLM gets access to a number of tools and has an option to use these tools to successfully complete its task in the form of a loop.

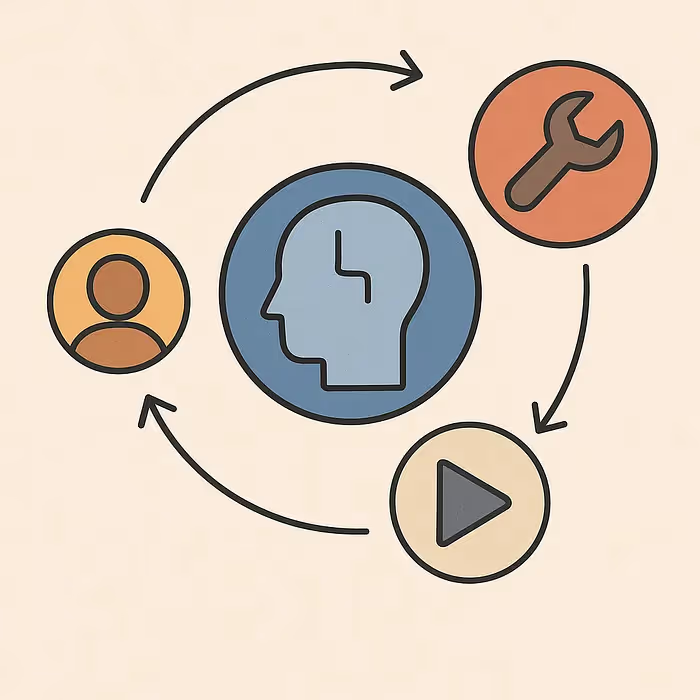

Static multi-agent systems: these systems have multiple agents with pre-defined personalities and connections between one another along with access to a number of tools. These agents form a general graph like structure and communication with each other to ultimately solve the task. In such multi-agent system the sequence of events is generally also determined by an agent

Dynamic multi-agent systems: these systems are capable of generating new agents with different specialties according to the requirements of the task. They have a very high degree of autonomy compared to the previous two agentic systems and are their trajectory is difficult to predict beforehand

Critical dimension of evaluation of agents

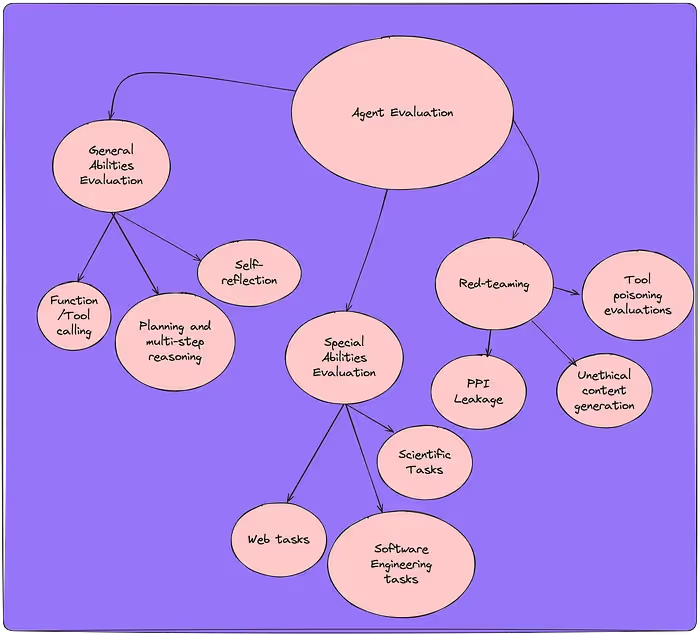

There are three main dimensions of evaluation for agents. Evaluating your agents on these broad categories ensures a holistic evaluation profile and gives an idea of the behaviour of the agent from different perspectives (ref: link):

- Evaluation of general abilities: This includes evaluation of characteristics common across different types of agent architectures. A few examples of such evaluations can be:

- Planning and multi-step reasoning enables agents to break complex tasks into manageable steps and develop strategic action sequences to achieve goals.

- Function calling enables AI systems to interact with external tools by recognizing intent, selecting appropriate functions, mapping parameters, executing commands, and generating coherent responses based on the results.

- Self-reflection enables agents to assess their reasoning, update beliefs based on feedback, and improve performance over time through iterative learning.

- Memory in AI systems involves short-term components for immediate task handling and long-term storage for persistent knowledge retention and application over extended interactions.

- Evaluation of specialized task-based abilities: these evaluation metrics includes the abilities required by the agent to complete specific tasks. A few examples of such evaluations can be, coding abilties of an agent which is designed for coding tasks

- Red-teaming evaluations: these evaluation metrics focus on the potential misuse of agents and test for different types of attacks such as personal identifiable information leakage attacks, tool poisoning attacks evaluates malicious tool use by the agent disguised as helpful tool

Key challenges in agent evaluation compared to LLM evaluations

LLMs are generally evaluated using benchmarks which test whether the model was able to successfully complete the task and whether it generated the correct reasoning (if any) to complete the task. So, all the necessary evaluation details are present in the generated output which is a single string and can be easily evaluated. In the case of agents we need to first understand what our evaluation requirements are for example:

- Do I want to check for tool calling?

- Do I care about internal guardrails?

- Do I care whether the right sequence of tools are being called?

Understanding what we need to evaluate in our agent’s behaviour is the first key challenge. We need to make sure we evaluate all aspects of the agent that is required for solving the task as well as check whether the agent accomplishes a task in a correct manner, i.e., without breaking internal or external guardrails. Once we have finalized what we need to evaluate we then we to understand “how we evaluate the agent?”. This bring us to the second key challenge which is tracing. Once we have decided on what to evaluate we need to shift our focus to how do we evaluate it? For example, if we have decided that we need to evaluate the tool calling ability of the agent then the next question is how do we evaluate the tool calling ability? The answer is traces.

Traces allow us to understand the behavior of the agent in an ordered manner. The traces track everything like input text, tool arguments, tool results, output generated and so on. We can parse these traces in a chronological order to understand the behavior of an agent in a step-by-step manner.

Example of evaluating an actual agent

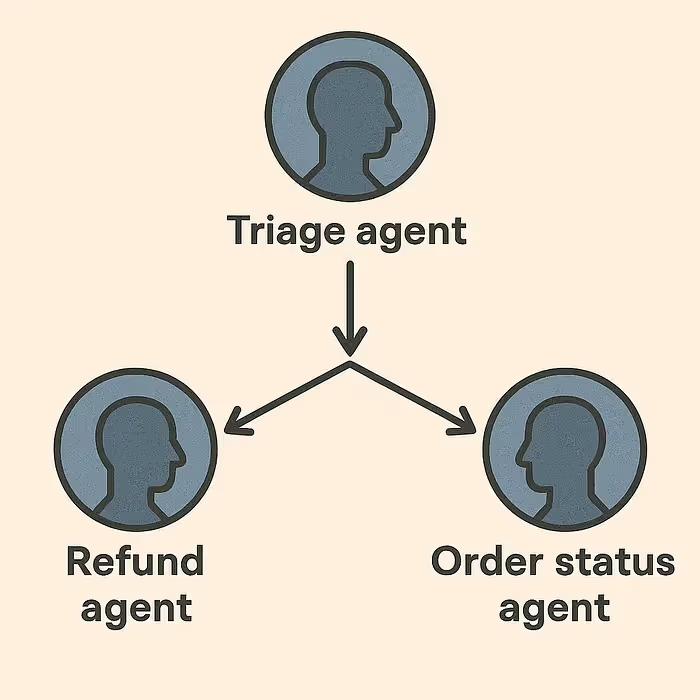

Let’s consider a simple customer support multi-agent system with three agents:

- Triage agent: directs the query to the correct agent

- Refund agent: gets the current refund status of the order

- Order status agent: gets the delivery status of the order

Now when a user enters a query this is what is visible from the outside:

'User’s Query': “📢 What is the status of my order?”

we see the following response

'Agent’s Response': “📢 Your order was cancelled,

and your refund is processing, Kumar.”

If we only evaluate the agent on the basis of such input-output pairs we will have no idea on whether the agent used the correct tool to get the status of the order or what sequence of steps did it follow to get the status of the order. We can solve this using traces. Parsing the traces generated during this conversations gives us:

📢 **User:** What is the status of my order? 🤖

**Assistant:** Passing query to `order_status_agent` with arguments {}.

🛠️ **Tool Response:** {'assistant': 'Order Status Agent'} 🤖

**Assistant:** Calls tool `get_order_status` with arguments {}.

🛠️ **Tool Response:** Your order was cancelled,

and your refund is processing, Kumar. 🤖 **Assistant:**

📢 Your order was cancelled, and your refund is processing, Kumar.

Observing such traces can show us that indeed the agent first transferred the query to “order_status_agent” which then used the tool “get_order_status” and then generated its response. This trace can be used to generate evaluations on a number of criterion such as:

- Did the agent use the correct tool?

- Were correct arguments passed to the tool?

The above example makes it clear that whenever we are evaluating an agentic system we need to understand two key things:

- What to evaluate? For example, tool calling ability, router ability

- What to trace? For example, tool usage, inter-agent communication

Figuring out the answer to these key questions will allow us to get a comprehensive understanding of how our agent is working and how it is performing on various tasks.

Conclusion

This blog discussed why we need to evaluate agents, what are the different types of agents on the basis of ease-of-evaluations, critical dimensions of evaluation of agents, the challenges associated with evaluating agents and finally how to tackle these challenges to understand the behavior of your agent in a comprehensive manner. Now, the main question is what if all of these problems were taken care of in one package? We will show in the next blog how we can use Enkrypt AI’s agent evaluation package to evaluate an agent from all critical dimensions and how to easily integrate your custom evaluators with a single line of code.

References

- Video of agent evaluation: https://www.youtube.com/watch?v=wG6LsQHuB24

- Github: https://github.com/enkryptai

- EnkryptAI: https://www.enkryptai.com/

.avif)

.jpg)

%20(1).png)