MCP: The Protocol That’s Quietly Revolutionizing AI Integration

Picture this: every time you picked up a new charger, you had to crack open your wall outlet and rewire the whole thing. Sounds exhausting, right? Well, that’s pretty much the kind of headache AI developers have been dealing with for the last few years. Building capable AI assistants, ones that can check calendars, access files, or send messages, has meant writing clunky, one-off integrations for each individual tool, over and over again.

While large language models (LLMs) like GPT, Claude, and their peers have seriously leveled up when it comes to writing, reasoning, and offering helpful responses, they’ve mostly been boxed in. Imagine them behind a pane of digital glass — they can’t peer into your files, they don’t catch what’s happening in your Slack, and they’re certainly not going to take action for you unless someone does some heavy backend lifting.

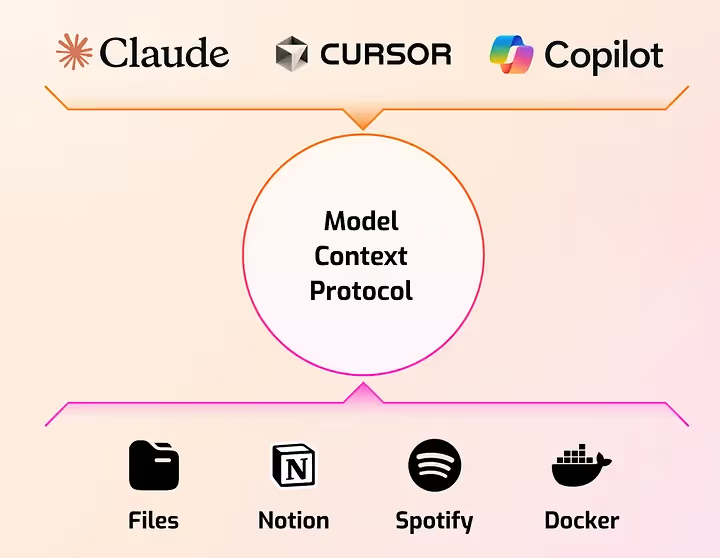

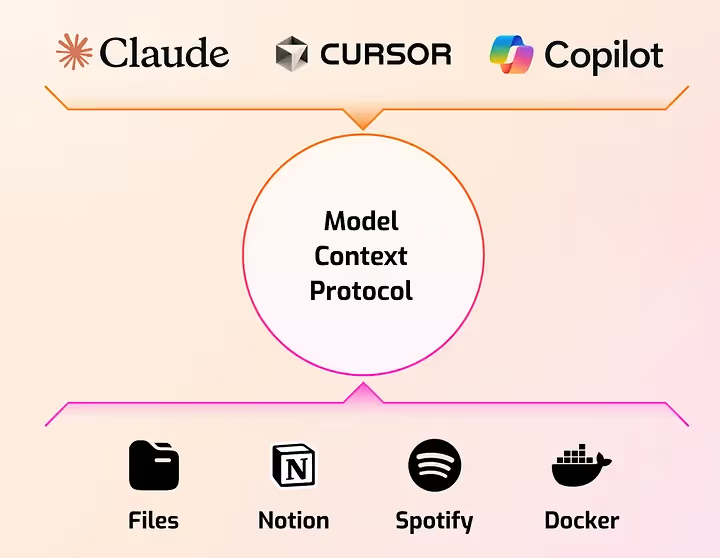

That’s where the Model Context Protocol (MCP) comes into play. It’s an open standard that’s quietly turning into a major breakthrough in how AI tools link up with the software we use every day. Created by Anthropic (yep, the folks behind Claude) and now gaining serious traction across the AI world, MCP is shaping up to be the one-size-fits-all connector that bridges language models with the real-world stuff they need to interact with. Whether you’re building cutting-edge AI apps or just want to understand how the next-gen assistants are being wired to actually do things, MCP is absolutely worth your attention.

The Problem: LLMs Are Smart but Disconnected

LLMs are undeniably powerful, but they’ve always had one major shortcoming: they’re great with the info you hand them, but they can’t go out and grab things on their own. No access to tools, no poking around in files, no pulling in live data. Until recently, if you wanted an AI assistant that could, say, peek into your calendar, pull details from your database, or open a file on your machine, you had to build that bridge yourself. From scratch. Every time.

Each tool, whether it was Slack, Notion, GitHub, or Google Sheets, came with its own set of rules. That meant spinning up a new custom integration for every single one. No shortcuts. This scattered, one-off approach made it ridiculously tough to scale AI systems across different workflows. Every new task felt like reinventing the wheel. Developers found themselves wrangling fragile code, navigating a maze of niche APIs, and wrestling with clunky plugins that barely had documentation. Integration wasn’t just a challenge; it became a never-ending grind.

What the AI world really needed was something consistent. A shared framework. A way for language models and tools to finally speak the same language. And that’s exactly the problem the Model Context Protocol (MCP) was built to solve.

What Is MCP and Why Does It Matter?

The Model Context Protocol (MCP) is an open-source, model-agnostic standard for connecting AI systems to the outside world, whether that means tools, files, memory, or external services. You can think of it like a universal adapter: it gives any large language model (LLM) a clear, consistent way to interface with just about anything beyond its own brain.

At its core, MCP handles three big jobs. First, it lays out how AI systems can fetch or access external resources — things like files, documents, or databases. Second, it defines how these systems can trigger tools or actions, such as sending an email or hitting an API endpoint. And third, it manages predefined tasks and workflows, like “summarize this doc” or “draft a reply.”

This kind of structure tackles one of the biggest pain points in AI development. Without MCP, devs have been stuck wiring up a custom integration for every new tool-model combo, a frustrating, time-consuming mess. MCP flips that on its head. Developers build an MCP server for a tool once, or reuse one that already exists, and any AI app that speaks MCP can use it out of the box. No rebuilds. No rewrites. Just plug and play.
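A back-of-the-envelope way to see the difference: with M AI apps and N tools, one-off integration needs an adapter for every app-tool pair, while a shared protocol needs one client implementation per app plus one server per tool. The sketch below only does that arithmetic; the 5-app, 20-tool ecosystem is a made-up example, not a measurement.

```python
def integrations_without_protocol(apps: int, tools: int) -> int:
    """One custom adapter for every app-tool pair: M x N."""
    return apps * tools


def integrations_with_protocol(apps: int, tools: int) -> int:
    """One MCP client per app plus one MCP server per tool: M + N."""
    return apps + tools


if __name__ == "__main__":
    # Hypothetical ecosystem: 5 AI apps that each want 20 tools.
    apps, tools = 5, 20
    print("one-off integrations:", integrations_without_protocol(apps, tools))  # 100
    print("with a shared protocol:", integrations_with_protocol(apps, tools))  # 25
```

For 5 apps and 20 tools that is 100 one-off integrations versus 25 protocol implementations, and the gap widens as either number grows.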

How MCP Works: Clients, Servers, and Tools

The Model Context Protocol (MCP) runs on a simple but elegant architecture. At the center of it all is the host, usually an AI assistant or application like Claude Desktop or a smart IDE. The host acts as the brain: for each tool, service, or data source it wants to use, it runs an MCP client that holds a one-to-one connection to the corresponding MCP server.
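In MCP’s terms, the host keeps one client connection per server, and each server advertises its own tools. The toy sketch below models only the routing idea: `FakeServer`, `Host`, and the stub tool bodies are hypothetical stand-ins, not the MCP SDK, and there is no real transport or protocol handshake.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class FakeServer:
    """Hypothetical stand-in for an MCP server: a name plus the tools it offers."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)


@dataclass
class Host:
    """Toy host that holds one connection per server and routes tool calls."""
    connections: Dict[str, FakeServer] = field(default_factory=dict)

    def connect(self, server: FakeServer) -> None:
        # In real MCP, this is where a client would open a session with the server.
        self.connections[server.name] = server

    def route(self, tool_name: str) -> FakeServer:
        # Find which connected server advertises the requested tool.
        for server in self.connections.values():
            if tool_name in server.tools:
                return server
        raise KeyError(f"no connected server offers {tool_name!r}")

    def call(self, tool_name: str, **arguments: str) -> str:
        return self.route(tool_name).tools[tool_name](**arguments)


if __name__ == "__main__":
    github = FakeServer("github", {"get_open_issues": lambda repo: f"open issues for {repo} (stub)"})
    slack = FakeServer("slack", {"send_message": lambda channel, text: f"posted to {channel} (stub)"})
    host = Host()
    host.connect(github)
    host.connect(slack)
    print(host.route("send_message").name)
    print(host.call("get_open_issues", repo="acme/app"))
```

Because each server only describes its own tools, adding a new integration is just another `connect` call; the host’s routing logic does not change.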

These MCP servers aren’t just passive endpoints. Each one offers up three main types of capabilities:

- Resources: Think of these as bits of information the AI can look at — documents, files, emails, database records, and so on. Importantly, they’re not wide open by default. The user or app explicitly chooses what to share, giving full control over what the AI sees. That means privacy isn’t just an afterthought — it’s built right in.

- Tools: These are the actions the AI can take. For instance, a GitHub server might expose a `get_open_issues` tool, while a Slack server could offer `send_message`. Based on what the user asks, the model figures out which tool to use, and MCP handles the actual call behind the curtain.

- Prompts: These are pre-built templates or workflows that guide the AI in performing specific tasks. They’re great for things like summarizing a doc, pulling insights from a dataset, or responding to a knowledge base question: repeatable, structured actions that benefit from a consistent approach.
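To make the three capability families concrete, here is a minimal in-memory sketch of a server-side dispatcher. The method names `resources/read`, `tools/call`, and `prompts/get` come from the MCP specification; the result shapes are simplified, and the data, the `summarize_doc` prompt, and the stubbed `get_open_issues` body are hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-memory data; a real server would read from GitHub, disk, a database, etc.
RESOURCES: Dict[str, str] = {"file:///notes/todo.txt": "Ship the release notes"}
PROMPTS: Dict[str, str] = {"summarize_doc": "Summarize the following document:\n\n{text}"}


def get_open_issues(repo: str) -> str:
    # Stubbed tool body; a real GitHub server would query the GitHub API here.
    return f"No open issues found in {repo} (stub)"


TOOLS: Dict[str, Callable[..., str]] = {"get_open_issues": get_open_issues}


def handle(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one request to the matching capability family."""
    if method == "resources/read":
        # Resources: data the AI can look at, addressed by URI.
        uri = params["uri"]
        return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}
    if method == "tools/call":
        # Tools: actions the AI can take, invoked by name with arguments.
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": text}], "isError": False}
    if method == "prompts/get":
        # Prompts: reusable templates filled in with the caller's arguments.
        filled = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        return {"messages": [{"role": "user", "content": {"type": "text", "text": filled}}]}
    raise ValueError(f"unsupported method: {method}")


if __name__ == "__main__":
    call = {"name": "get_open_issues", "arguments": {"repo": "acme/app"}}
    print(json.dumps(handle("tools/call", call), indent=2))
```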

Now, when the AI wants to do something, it doesn’t just spit out a text command and hope it works. Instead, the host’s MCP client sends a well-formed, structured request to the right server, which does the job and sends the result back. The whole exchange uses JSON-RPC 2.0 messages, which makes it safer, more predictable, and easier to debug when things go sideways.
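Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds a `tools/call` request and reads the text out of a response. The `send_message` arguments and the reply string are hand-written examples, and a real client would also perform the `initialize` handshake and send messages over a transport such as stdio, which this sketch omits.

```python
import json
from itertools import count
from typing import Any, Dict

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched to them


def make_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


def read_tool_result(raw: str) -> str:
    """Pull the text blocks out of a tools/call response, surfacing errors."""
    response = json.loads(raw)
    if "error" in response:
        # Protocol-level failure, e.g. an unknown tool or malformed params.
        raise RuntimeError(response["error"]["message"])
    result = response["result"]
    text = "\n".join(block["text"] for block in result["content"] if block["type"] == "text")
    if result.get("isError"):
        # The tool ran but reported a failure inside the result itself.
        raise RuntimeError(text)
    return text


if __name__ == "__main__":
    print(make_tool_call("send_message", {"channel": "#general", "text": "Build is green"}))
    # Hand-written example of how a server might reply.
    reply = ('{"jsonrpc": "2.0", "id": 1, "result": {"content": '
             '[{"type": "text", "text": "Message sent"}], "isError": false}}')
    print(read_tool_result(reply))
```

Keeping protocol errors and tool errors separate, as above, lets the host tell “the request was malformed” apart from “the tool ran and failed.”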

Real-World Adoption: Who’s Using MCP?

Even though it’s still relatively new, MCP is already starting to make waves in the real world. Take Claude Desktop, for example: it serves as Anthropic’s reference MCP client, which means users can hook Claude up to their local tools, files, and services with minimal hassle. All it takes is pointing it to an MCP server, and suddenly Claude can read through documents, work with files, and access project data, all while keeping user control and privacy firmly intact.
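For Claude Desktop, “pointing to an MCP server” means adding an entry under `mcpServers` in its `claude_desktop_config.json` file, giving the command that launches the server. The helper below sketches how such an entry might be merged into an existing config; `add_server`, the server name `notes`, and the script path are hypothetical placeholders, and the file’s location depends on your OS, so check the current Claude Desktop docs.

```python
import json
from typing import Any, Dict, List


def add_server(config: Dict[str, Any], name: str, command: str, args: List[str]) -> Dict[str, Any]:
    """Register one MCP server under the mcpServers key, keeping existing entries."""
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": args}
    return config


if __name__ == "__main__":
    # Hypothetical server name and path; substitute your own server's launch command.
    cfg = add_server({}, "notes", "python", ["/path/to/notes_server.py"])
    print(json.dumps(cfg, indent=2))
```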

And it’s not just Claude. Other apps are getting in on the action too. The AI-first coding environment Cursor uses MCP to give its assistant access to platforms like GitHub and Slack. Even Microsoft is joining the party — they’ve announced upcoming support for MCP within Azure OpenAI. That’s a big signal: this protocol isn’t just for indie devs and side projects anymore — it’s catching the eye of the big players.

Meanwhile, the open-source scene is moving fast. Developers have already spun up a whole collection of MCP servers for widely used tools like Google Drive, Notion, PostgreSQL, Brave Search, and plenty more. There are even connectors for shell access, vector databases, and a range of cloud services. Thanks to this growing library, developers don’t have to start from scratch every time — they can just plug into what’s already out there and get moving.

MCP vs. LangChain and Function Calling

If you’ve spent any time working with AI agents, you’ve probably asked yourself how MCP stacks up against tools like LangChain or OpenAI’s function calling. So here’s the scoop: MCP isn’t trying to replace them — it’s designed to play nicely alongside them.

Think of it this way: LangChain is great for stitching together sequences of actions and keeping track of the overall flow across an AI interaction. OpenAI’s function calling lets a model emit a structured request to call a function, which your code then executes. MCP fits underneath all of that, as the plumbing layer that actually defines how tools get exposed and how those requests get executed.

What’s really cool is how these pieces can work together. LangChain, for instance, can treat MCP as a source of tools. And when you’ve got a model using function calling, it can generate calls that MCP knows how to carry out. It’s all modular. It’s interoperable. And most importantly, it means developers don’t have to rebuild the same tools again and again for every AI framework — they can just plug into the shared interface MCP provides and get going.
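One way to picture this layering: an MCP server describes each tool with a name, a description, and a JSON Schema `inputSchema`, which maps almost one-to-one onto the tool format used by OpenAI-style function calling. The sketch below converts in both directions; the function names and the `get_open_issues` definition are hypothetical, and production adapters handle more edge cases.

```python
import json
from typing import Any, Dict


def mcp_tool_to_openai(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Map an MCP tool definition (as listed by tools/list) to an OpenAI-style tool entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both sides describe arguments with JSON Schema, so the schema passes through.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def openai_call_to_mcp_params(tool_call: Dict[str, Any]) -> Dict[str, Any]:
    """Turn a model's function call back into params for an MCP tools/call request."""
    fn = tool_call["function"]
    # OpenAI-style tool calls carry their arguments as a JSON-encoded string.
    return {"name": fn["name"], "arguments": json.loads(fn["arguments"] or "{}")}


if __name__ == "__main__":
    # Hypothetical definition a GitHub MCP server might advertise.
    get_open_issues = {
        "name": "get_open_issues",
        "description": "List open issues for a repository",
        "inputSchema": {
            "type": "object",
            "properties": {"repo": {"type": "string"}},
            "required": ["repo"],
        },
    }
    print(json.dumps(mcp_tool_to_openai(get_open_issues), indent=2))
    model_call = {"id": "call_1", "type": "function",
                  "function": {"name": "get_open_issues", "arguments": '{"repo": "acme/app"}'}}
    print(openai_call_to_mcp_params(model_call))
```

The dict returned by `openai_call_to_mcp_params` has the same shape as the `params` of a `tools/call` request, so the tool itself never needs to know which framework or model produced the call.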

Benefits of MCP: Why It’s Gaining Momentum

There’s a reason MCP is catching on fast with AI developers and companies across the board — it just makes life easier.

First off, it cuts through the complexity. Instead of wrestling with tangled integrations, developers can focus on what the AI should do — not how to wire it up to a dozen different APIs or data sources. MCP cleanly separates the intelligence from the infrastructure.

Second, it’s open and flexible. MCP isn’t locked into any one model, vendor, or language. You can use it with Claude, GPT, a custom-tuned model, or whatever AI system you’re building with. That means no vendor lock-in, no rewriting every time you switch models, and no spaghetti code to maintain.

Third, it’s built with security in mind. Nothing is exposed by default. The AI only sees what you explicitly allow it to access. It can’t dig through your files or run commands unless you give the green light. That makes it a natural fit for enterprise environments, where data privacy and compliance aren’t negotiable.
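The “nothing runs until you give the green light” idea is enforced by the host application. Below is a minimal sketch of such a gate, assuming a simple in-process approval set; `ConsentGate` and its methods are hypothetical, and real hosts present approvals through their own UI.

```python
from typing import Callable, Dict, Set


class ConsentGate:
    """Hypothetical host-side guard: a tool runs only after the user approves it."""

    def __init__(self, tools: Dict[str, Callable[..., str]]) -> None:
        self.tools = tools
        self.approved: Set[str] = set()  # deny by default: nothing is approved yet

    def approve(self, name: str) -> None:
        # Called when the user explicitly grants access to one tool.
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        self.approved.add(name)

    def revoke(self, name: str) -> None:
        self.approved.discard(name)

    def call(self, name: str, **arguments: str) -> str:
        if name not in self.approved:
            raise PermissionError(f"user has not approved {name!r}")
        return self.tools[name](**arguments)


if __name__ == "__main__":
    gate = ConsentGate({"send_message": lambda channel, text: f"posted to {channel} (stub)"})
    try:
        gate.call("send_message", channel="#general", text="hi")
    except PermissionError as err:
        print("blocked:", err)
    gate.approve("send_message")
    print(gate.call("send_message", channel="#general", text="hi"))
```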

And finally, there’s the community. MCP’s momentum isn’t just coming from big names — it’s being driven by a growing open-source ecosystem. Developers are sharing servers, SDKs, and docs, which means you don’t have to start from scratch. You can build faster, smarter, and more confidently by standing on the shoulders of what’s already out there.

The Future of AI Agents Runs Through MCP

We’re standing at the edge of a major shift in how AI agents actually work. Up until now, even the most advanced models have been stuck in reactive mode — brilliant at answering questions, but blind to the tools, files, and real-world context they’d need to truly get things done. MCP is changing that.

By giving AI systems a standardized way to talk to external tools and data, MCP unlocks a whole new class of applications — ones that don’t just understand what you ask, but know how to act on it. From summarizing documents and filing support tickets, to generating reports or jumping into team chats, AI agents can now plug directly into the workflows you already use.

Of course, we’re not all the way there yet. Models still have room to grow when it comes to choosing the right tools or structuring correct arguments. And developers still need to think carefully about how much context to feed in. But the groundwork? It’s here. And it’s rock solid.
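Because models sometimes produce malformed arguments, a host can check them against the tool’s `inputSchema` before forwarding a call. The sketch below covers only a small subset of JSON Schema (`required`, `properties`, basic `type` checks, and `additionalProperties: false`); `check_arguments` is a hypothetical helper, and a real host would use a full JSON Schema validator.

```python
from typing import Any, Dict, List

# Small subset of JSON Schema types; a real host would use a full validator.
_TYPES: Dict[str, Any] = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "object": dict, "array": list,
}


def check_arguments(schema: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return problems found in model-produced arguments (empty list if none)."""
    problems: List[str] = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    properties = schema.get("properties", {})
    for key, value in arguments.items():
        if key not in properties:
            # JSON Schema allows extra keys unless additionalProperties is false.
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected argument: {key}")
            continue
        type_name = properties[key].get("type")
        # Type lists such as ["string", "null"] are not handled in this sketch.
        expected = _TYPES.get(type_name) if isinstance(type_name, str) else None
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        wrong_bool = isinstance(value, bool) and type_name in ("integer", "number")
        if expected is not None and (wrong_bool or not isinstance(value, expected)):
            problems.append(f"{key} should be of type {type_name}")
    return problems


if __name__ == "__main__":
    schema = {
        "type": "object",
        "properties": {"repo": {"type": "string"}, "limit": {"type": "integer"}},
        "required": ["repo"],
    }
    print(check_arguments(schema, {"repo": "acme/app", "limit": 5}))
    print(check_arguments(schema, {"limit": "five"}))
```

Collecting every problem instead of stopping at the first one lets the host hand the full list back to the model in a single retry.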

If you’re working on AI tools — or just figuring out how to make assistants that are more than clever chatbots — the Model Context Protocol is worth a serious look. It might just be the missing layer between smart models and truly useful, real-world AI.

In our next blog post, we’ll build our own MCP server to give Claude some exciting new capabilities!
