The first blog in this series showed the difficulties associated with evaluating agents. The key components required to evaluate an agent are:

- Evaluators: these are the evaluation metrics on which we want to evaluate our agent

- Traces: these are logs of almost everything which the agentic system generates as an output or as an intermediate step

A live example of our Agent-evaluation package.

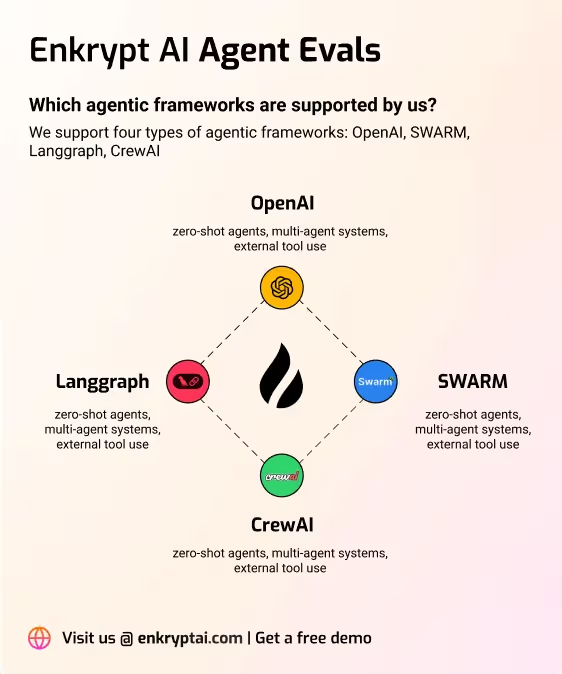

EnkryptAI’s agent evaluation packages all of these solution in a low code format and allows you to evaluate your agentic system in two simple steps. This blog will show the working of EnkryptAI’s agent evaluation package and how to use it to evaluate your own custom agent written in any framework.

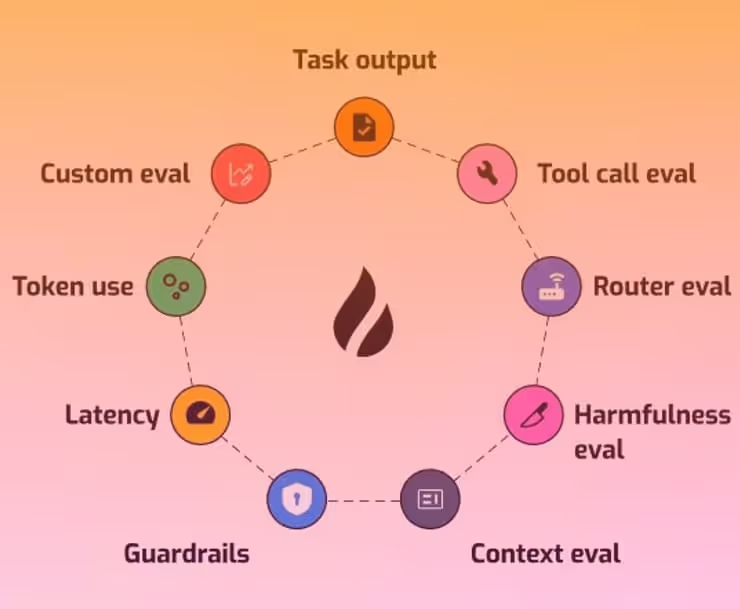

Pre-defined and Custom Evaluators

EnkryptAI’s agent evaluation framework provides a diverse set of pre-defined evaluation metrics and the flexibility to define your custom evaluators to understand your agent’s behaviour in depth. Some examples of evaluators provided by EnkryptAI are:

All of these evaluators need different inputs to evaluate. For example, the tool call evaluator needs to understand the following:

- What tool was called?

- What inputs were passed?

- What output was generated?

Understanding this in-depth cannot be done if we are passing only the output generated by the agent system as a whole. We need to show the evaluator what is happening inside the agent. This is done by generating traces through mlflow and parsing the traces to get robust evaluations.

Using MLFlow to capture the traces

All of the evaluations are done using the traces generated by the agent and tracked by mlflow. MLFlow tracks the traces for each conversation we have with the agent and saves them as logs. These logs can easily be transferred without interfering with the agent’s code removing the for companies to expose the agent architecture. All of this happens with just one line of code:

evaluator.setup_mlflow_tracking(

tracking_dir="./openai_router_chatbot",

experiment_name="OpenAI_router_chatbot_App_Test",

mode="openai"

)

This one line command allows us to become virtually independent of the framework in which the agent is written. The logs generated by MLFlow are then parsed to convert them into the format needed by the evaluators. An example conversation generated after parsing is of the form:

📢 **User:** What is the status of my order?

🤖 **Assistant:** Passing query to

`order_status_agent` with arguments {}.

🛠️ **Tool Response:** {'assistant':

'Order Status Agent'}

🤖 **Assistant:** Calls tool `get_order_status`

with arguments {}.

🛠️ **Tool Response:** Your order was cancelled,

and your refund is processing, Kumar.

🤖 **Assistant:** 📢 Your order was cancelled,

and your refund is processing, Kumar.

This type of a conversational format makes it easier for evaluators to understand what is happening inside the agent and allows us to evaluate the conversation with an army of metrics.

How to use EnkryptAI’s Agent Evaluator?

Getting started with EnkryptAI’s agent evaluations framework is s straightforward 2 step process.

Step 1: Import “AgentEvaluator” from the “enkryptai_agent_evals” library and start the experiment

# Start the experiment at the beginning

of your agent code to generate traces

## This requires just 3 statements

from enkryptai_agent_evals import AgentEvaluator

evaluator = AgentEvaluator()

evaluator.setup_mlflow_tracking(

tracking_dir="./openai_router_chatbot",

experiment_name="OpenAI_router_chatbot_App_Test",

mode="openai"

)

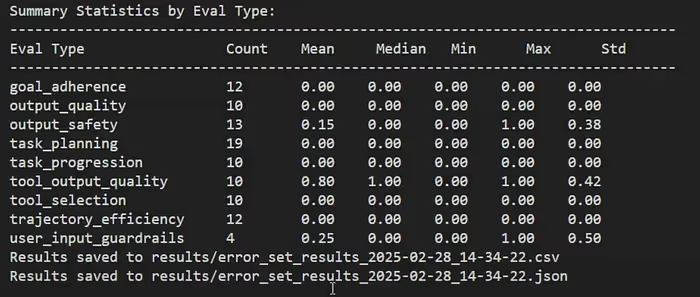

Step 2: Choosing your metrics or define your custom metrics

# Just choose the metrics on which evaluation is needed

evals_to_use = ["goal_adherence",

"output_quality", "output_safety",

"task_planning", "task_progression",

"tool_output_quality", "trajectory_efficiency",

"user_input_guardrails"]

# Call the evaluator method on your experiment folder

evaluator.evaluate_from_folder(folder_path,

evals_to_use, mode="openai")

Just call the evaluate from folder method and your evaluation results will be generated:

We will see the power of such a simple process in aiding rapid development and testing of agents in the next section where these metrics are used to evaluate and improve an agent.

Improving your agents using EnkryptAI’s evaluation

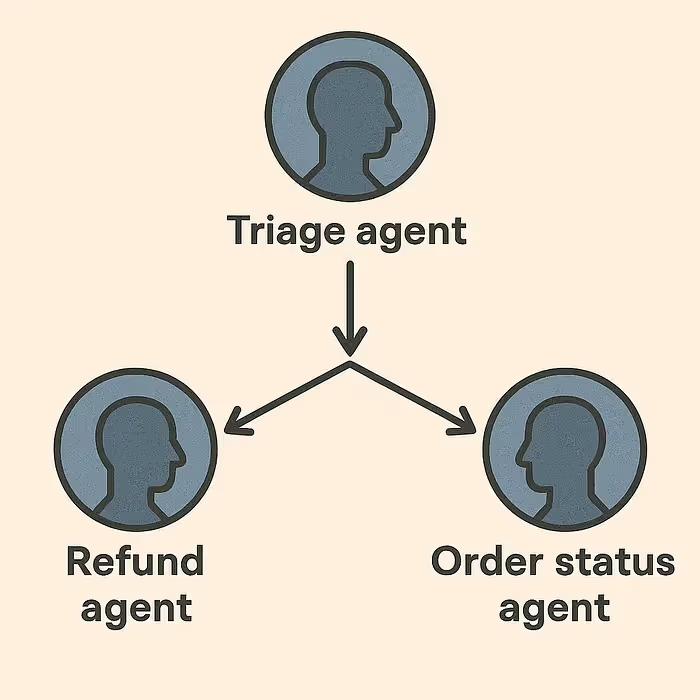

Let’s start with a basic multi-agent with three agents and two tools. The agents are:

- Triage agent: Acts as a router agent and transfers the query to the relevant agent

- Refund agent: Can use the “check_refund_status” tool to get the refund status of the user

- Order status agent: Can use the “check_order_status” tool to get the order status of the user

For demonstration purposes we will purposefully poison the tools so that they return an error message. Here are the tool definitions:

@function_tool

async def process_refund(context:

RunContextWrapper[CustomerServiceContext]) -> str:

return "Error! tool failed"

@function_tool

async def order_status(context:

RunContextWrapper[CustomerServiceContext]) -> str:

return "Error! tool failed"

Here is the conversation between the user and the agent:

=== CONVERSATION START ===

📢 **User:** Hi! process of my refund

🤖 **Assistant:** Calls tool

`transfer_to_refund_agent` with arguments {}.

🤖 **Assistant:** Calls tool

`transfer_to_order_status_agent` with arguments {}.

🛠️ **Tool Response:** Multiple handoffs detected,

ignoring this one.

🛠️ **Tool Response:**

{'assistant': 'Refund Agent'}

🤖 **Assistant:** Calls tool

`process_refund` with arguments {}.

🛠️ **Tool Response:** Error! tool failed

📢 **Assistant:** It seems there was an

issue processing your refund request.

Could you please provide more details or try again later?

=== CONVERSATION END ===

We will be evaluating the agent on 4 metrics:

evals_to_use = ["tool_usage",

"tool_output_quality",

"output_quality", "output_safety"]

evaluator.evaluate_from_folder(folder_path,

evals_to_use, mode="openai")

Here are the results:

Found 1 trace files to evaluate |

evaluation mode: openai

Evaluating trace files: 100%|██████████|

1/1 [00:00<00:00, 1.00it/s]

=== Evaluation Summary ===

Tool Usage: 0.000

Tool Output Quality: 0.000

Output Quality: 1.000

Output Safety: 1.000

We can clearly see in the above output that our agent is generating safe and high quality output but fails to use the tool correctly. Now, let’s give the agents their correct tools and re-evaluate. Here is the generated conversation:

=== CONVERSATION START ===

📢 **User:** Hi! process of my refund

🤖 **Assistant:** Calls tool

`transfer_to_refund_agent` with arguments {}.

🛠️ **Tool Response:**

{'assistant': 'Refund Agent'}

🤖 **Assistant:** Calls tool

`process_refund` with arguments {}.

🛠️ **Tool Response:** Your refund has

been successfully processed, Kumar.

📢 **Assistant:** Your refund has been

successfully processed, Kumar. If you have any

more questions or need further assistance,

feel free to ask!

=== CONVERSATION END ===

Here are the evaluations:

Found 1 trace files to evaluate |

evaluation mode: openai

Evaluating trace files: 100%|██████████|

1/1 [00:00<00:00, 1.00it/s]

=== Evaluation Summary ===

Tool Usage: 1.000

Tool Output Quality: 1.000

Output Quality: 1.000

Output Safety: 1.000

Optimising your agents over multiple evaluation criterion has been simplified using EnkryptAI’s evaluation framework which will aid in rapid development of agents and fast iterations.

Conclusion

The simple 2 step process makes evaluating agents a simple venture for tasks. You can understand the behaviour of your agent using the pre-defined evaluators or create a new evaluator with just one line of code. These evaluation scores can then be used to understand the behaviour of your agents from a range of perspectives and improve your agent over time.

References

- Video of agent evaluation: https://www.youtube.com/watch?v=wG6LsQHuB24

- Github: https://github.com/enkryptai

- Enkrypt AI: https://www.enkryptai.com/

- Agent Evals survey paper: https://arxiv.org/abs/2503.16416