Autonomous AI agents can be revolutionary, but they need to be built with a trust- and safety-first approach.

Exploring the Potential and Challenges of AI-Driven Decisions

AI agents are transforming industries by enabling faster, more precise, and more scalable decision-making. From personalized learning assistants to intelligent supply chain management, these systems optimize operations, enhance user experiences, and solve complex problems. However, this power comes with a responsibility: ensuring that decisions made by AI are ethical, fair, and transparent. Failing to meet it risks further widening the AI trust gap that already exists.

In this blog post, we’ll explore the real-world applications of AI agents, the challenges they pose, and how businesses can establish trust in autonomous AI systems to unlock their full potential in 2025.

AI Agent Use Cases: Driving Decisions Across Industries

AI agents are already deeply embedded in our lives, driving transformative outcomes across sectors. Here are some notable examples:

- Personalized Learning Assistants
  - Use Case: AI-powered tutors adapting to individual learning needs.
  - Decisions Made:
    - Recommending topics or exercises tailored to the student.
    - Providing feedback or encouragement at appropriate times.
    - Adjusting difficulty levels for optimal learning.
  - Impact: Improves engagement, enhances learning outcomes, and broadens access to quality education.

- Customer Support Automation
  - Use Case: AI chatbots and agents managing customer inquiries.
  - Decisions Made:
    - Classifying queries (e.g., billing vs. technical support).
    - Selecting appropriate responses from a knowledge base.
    - Escalating complex issues to human agents.
  - Impact: Reduces response times, lowers costs, and provides 24/7 support.

- Healthcare Virtual Assistants
  - Use Case: AI agents supporting symptom analysis and healthcare management.
  - Decisions Made:
    - Suggesting possible conditions based on symptoms.
    - Recommending actions like consulting a doctor or taking medication.
    - Sending follow-up reminders for medication or appointments.
  - Impact: Enhances accessibility in remote areas and reduces healthcare professionals' workload.

- Automated Research and Data Analysis
  - Use Case: AI systems analyzing large datasets to identify trends and insights.
  - Decisions Made:
    - Prioritizing relevant data or research papers.
    - Highlighting significant patterns or anomalies.
    - Summarizing findings for stakeholders.
  - Impact: Accelerates research cycles and improves decision-making with data-driven insights.

- Intelligent Supply Chain Management
  - Use Case: AI optimizing logistics, inventory, and demand forecasting.
  - Decisions Made:
    - Adjusting inventory based on demand forecasts.
    - Choosing the most efficient delivery routes.
    - Responding proactively to supply chain disruptions.
  - Impact: Reduces waste, minimizes costs, and improves operational efficiency.

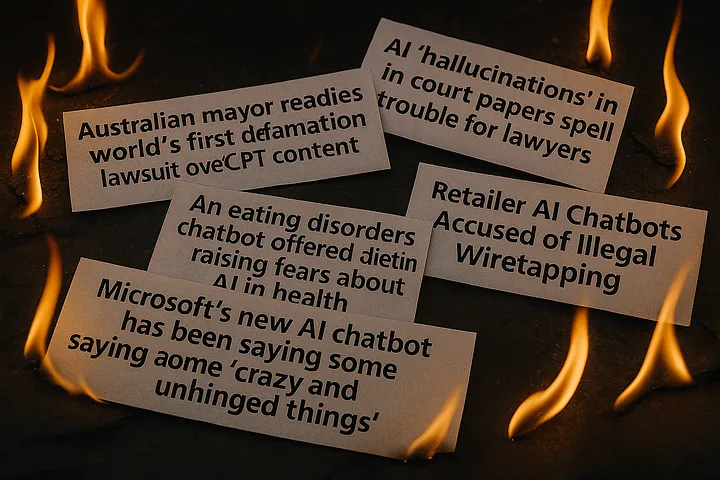

Gaps in AI Trust

AI trust remains a significant challenge for enterprises and consumers alike. According to Forrester, 25% of data leaders cite lack of trust in AI as a major concern, while 21% highlight AI/ML transparency issues.

Consumer skepticism is even higher: only 28% of U.S. online adults trust companies that use AI, 46% don’t, and 52% view AI as a societal threat.

KPMG reports that 61% of people are wary of trusting AI, with healthcare AI being the most trusted and HR applications the least. Notably, emerging economies show higher trust and acceptance of AI compared to developed regions. Addressing this trust gap is essential for organizations leveraging AI for growth.

Top 5 Challenges in AI Decision-Making in 2025: Bridging the AI Trust Gap

While AI agents promise immense benefits, their decision-making processes also present significant challenges:

- Bias and Fairness

  AI systems can unintentionally amplify biases present in their training data.

  - Example: A learning assistant might favor topics relevant to certain demographics, disadvantaging others.

- Explainability and Transparency

  Many AI models operate as "black boxes," making their decisions difficult to interpret or justify.

  - Example: Researchers may struggle to validate insights generated by AI systems, which undermines trust.

- Data Privacy and Security

  Handling sensitive information can lead to data breaches or misuse if safeguards are inadequate.

  - Example: A healthcare assistant might inadvertently expose patient information.

- Over-Reliance on AI

  Excessive dependence on AI can weaken human critical thinking and reduce adaptability.

  - Example: Rigid supply chain systems may fail to adapt to unforeseen disruptions.

- Ethical Dilemmas

  AI decisions can prioritize efficiency over ethical considerations.

  - Example: A supply chain agent may recommend cost-cutting measures that harm the environment.
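To make the bias-and-fairness challenge more concrete, here is a minimal Python sketch of one common check, the demographic parity gap: the largest difference in positive-outcome rates between groups. It assumes decisions are logged as hypothetical `(group, selected)` pairs (for example, whether a learning assistant recommended an advanced topic to a student in a given group); the function names, the sample log, and the 0.2 review threshold are illustrative assumptions, not part of any specific product. Demographic parity is only one of several fairness metrics, and a small gap alone does not prove a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (selected=True) for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Largest selection-rate difference between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions)
    if not rates:
        return 0.0  # no decisions logged, so nothing to compare
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log and review threshold, for illustration only.
REVIEW_THRESHOLD = 0.2
log = [("A", True), ("A", True), ("A", False), ("A", True),
       ("B", True), ("B", False), ("B", False), ("B", False)]

gap = demographic_parity_gap(log)
print(selection_rates(log))  # {'A': 0.75, 'B': 0.25}
print(f"parity gap: {gap:.2f}")  # parity gap: 0.50
if gap > REVIEW_THRESHOLD:
    print("flag recommendations for human review")
```

In practice, a check like this would run regularly over recent decisions as part of the continuous monitoring described below, rather than once at launch.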

Establishing Trust in Autonomous AI Agents

To realize the potential of fully autonomous AI agents, organizations must address these challenges head-on. Key strategies for building trust in AI include:

- Prioritizing Safety: Rigorously test AI systems to mitigate risks and prevent harmful outcomes.

- Ensuring Transparency: Use explainable AI techniques to make decision-making processes interpretable and trustworthy.

- Fostering Ethical Practices: Align AI actions with human values, prioritizing fairness, empathy, and environmental sustainability.

- Adopting Continuous Monitoring: Regular audits can help ensure autonomous agents remain aligned with ethical and operational standards.

- Balancing Autonomy and Oversight: Gradually transition from "human-in-the-loop" systems to autonomous agents, maintaining oversight during critical phases.
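The "Balancing Autonomy and Oversight" strategy can be sketched as a simple routing rule: high-impact actions always go to a human, and routine actions run autonomously only when the agent's confidence meets a threshold that is relaxed phase by phase. This is a minimal Python sketch under stated assumptions: the `Decision` fields, the `route` function, and the rollout thresholds are hypothetical, and it assumes the agent reports a reasonably calibrated confidence score.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str        # what the agent proposes to do
    confidence: float  # agent's self-reported confidence in [0, 1] (assumed calibrated)
    high_impact: bool  # e.g. medication advice or a large purchase order


# Hypothetical rollout schedule: an infinite threshold means every decision is
# reviewed; the threshold is lowered only after audits show the agent is reliable.
ROLLOUT_THRESHOLDS = {"pilot": float("inf"), "limited": 0.95, "broad": 0.85}


def route(decision: Decision, autonomy_threshold: float) -> str:
    """Return "auto" if the agent may act on its own, otherwise "human_review"."""
    if decision.high_impact:
        return "human_review"  # critical decisions always keep a human in the loop
    if decision.confidence >= autonomy_threshold:
        return "auto"
    return "human_review"


reroute = Decision("reroute shipment via an alternate hub", confidence=0.9, high_impact=False)
for phase, threshold in ROLLOUT_THRESHOLDS.items():
    print(phase, route(reroute, threshold))
```

With these illustrative thresholds, the routine reroute at 0.9 confidence is sent for human review in the pilot and limited phases and runs autonomously in the broad phase, while any action flagged as high impact is always reviewed.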

Conclusion: A Safer, Smarter Future with AI

The next generation of autonomous AI agents has the potential to revolutionize industries and enhance everyday life. However, this leap forward must not come at the expense of trust and safety.

At Enkrypt AI, we are committed to empowering organizations with robust tools for ethical AI development. By prioritizing transparency, fairness, and security, we aim to unlock the full potential of AI while safeguarding its use for future generations.

How will your organization embrace the future of AI? Join us in shaping a responsible and innovative AI-powered world.

FAQs

- How can organizations build greater AI trust in 2025?

  Answer: The following are the top 5 ways to build greater AI trust in 2025:

  - Improve Data Quality

    Ensure AI models are trained on accurate, unbiased, and complete data. Regular data reviews and internal testing can help identify and address quality issues.

  - Strengthen AI Governance

    Implement enterprise-wide AI governance frameworks. Establish clear rules and policies to oversee AI models and manage risks proactively.

  - Involve the Whole Organization

    Engage both technical and non-technical teams in AI risk management. Provide workforce training to boost AI literacy and clarify roles and responsibilities.

  - Create Diverse Teams

    Build cross-functional teams, including AI ethics advisory boards, to ensure diverse perspectives guide AI development and deployment.

  - Define a Clear Purpose

    Set clear goals for AI initiatives, focused on improving customer experience, speeding up outcomes, and supporting team development through AI-driven learning.

- Is AI 100% trustworthy?

  Answer: AI is not 100% trustworthy due to its inherent unpredictability, lack of transparency, and limited ethical reasoning. Its decision-making often functions as a "black box," making it difficult to explain or predict outcomes. While AI can process vast amounts of data, its lack of human-like ethical norms and dynamic reasoning creates trust challenges. Efforts like human oversight and improved explainability aim to bridge this gap, but achieving complete trust remains a complex task.

- Which AI risks contribute most to the AI trust gap?

  Answer: The top AI risks that create the AI trust gap are:

  - Bias: AI can inherit biases from training data, leading to unfair outcomes in hiring, healthcare, and policing.
  - Cybersecurity Threats: AI can be exploited for cyberattacks such as identity theft, deepfakes, and phishing scams.
  - Data Privacy Issues: AI systems often collect personal data without consent, risking privacy breaches.
  - Environmental Impact: AI’s high energy consumption contributes to carbon emissions and water usage.
  - Existential Risks: Rapid AI advancement could outpace human control, posing long-term existential threats.
  - Intellectual Property (IP) Infringement: AI-generated content raises concerns about copyright and ownership disputes.
  - Job Displacement: AI-powered automation may cause job losses, though it can also create new roles in tech.
  - Lack of Accountability: Responsibility for AI-driven errors remains legally and ethically unclear.
  - Transparency Issues: AI’s complex models often operate as "black boxes," limiting explainability and trust.
  - Misinformation and Manipulation: AI can be used to spread fake news, deepfakes, and other disinformation that misleads the public.