Enhancing AI Guardrails with Red Teaming: A Self-Improving Security Cycle for AI Applications

The Problem: AI Can Be Tricked

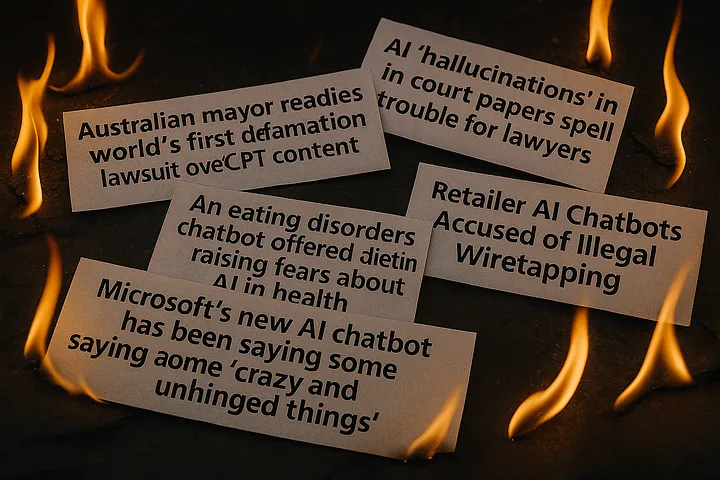

Generative AI models, despite their sophistication, remain vulnerable to manipulation. The manipulation happens in the form of injection attacks where adversaries craft input to force un-intended behavior. Injection attacks lead to extraction of confidential data and steering responses in harmful direction. Static guardrails are not enough to block these attacks as there are always new ways to exploit system weaknesses. Guardrails must evolve continuously to safeguard generative AI applications from evolving attack configurations.

At Enkrypt AI, we use Red Teaming to test and evolve our Guardrails so that they just don’t react to known threats, they anticipate new ones.

Understanding Injection Attacks and the Role of Red Teaming

Injection attacks manipulate an AI’s input mechanisms to subvert intended behaviors. Attackers may:

- Circumvent content restrictions

- Extract hidden system prompts

- Introduce misleading or harmful narratives into responses

Guardrails are designed to mitigate these risks, but traditional testing often fails to capture the complexity of real-world attacks. This is where red teaming becomes essential.

At Enkrypt AI, our red teaming methodology includes:

- Adversarial Simulation: Crafting diverse attack vectors that push AI guardrails to the limit.

- Broad Coverage: Testing across hundreds of risk categories to uncover vulnerabilities others miss.

- Real-World Scenarios: Simulating adversarial behaviors observed in the wild, ensuring comprehensive security evaluation.

How Red Teaming Improves Injection Attack Detection

Red teaming isn’t just about identifying vulnerabilities, it’s about creating a self-improving security loop. See Figure 1. This process enhances AI security in five critical ways:

- Identifying Vulnerabilities

Injection attacks often exploit subtle weaknesses that are not immediately obvious. Red teaming systematically uncovers these blind spots, revealing where guardrails need refinement.

- Enhancing Training Data

Each identified attack vector is an opportunity to improve detection models. The new attack vectors found are integrated into training datasets for our guardrails, making them progressively more robust.

- Improving Detection Accuracy

As a rule, stricter guardrails increase the likelihood of false positives, while less strict ones raise the likelihood of false negatives. Red Teaming helps stress-test these guardrails, ensuring a balance between these extremes. This process ensures that guardrails are precise, adaptive, and effective without unnecessary friction.

- Staying Ahead of Evolving Threats

Cyber attackers do not sleep and are continuously finding new ways to break the system. Red Teaming enables us to anticipate and counteract new ways of attacking before an attacker can.

- Strengthening System Integrity

Beyond detecting attacks, red teaming reinforces the structural integrity of AI models, ensuring that system prompts, configurations, and sensitive data remain protected.

The Feedback Loop: Red Teaming and Guardrails Reinforce Each Other

Security is not a one-time solution—it’s a continuous process. See Figure 2. Red teaming creates a structured feedback loop that enhances AI defenses:

- Red Teaming Identifies Weaknesses

Comprehensive simulations pinpoint gaps in detection models and areas susceptible to adversarial exploits. - Guardrails Are Refined

Insights from red teaming are fed back into Enkrypt AI’s security infrastructure, allowing us to enhance response mechanisms and preempt future risks. - New Guardrails Are Red Teamed

After implementing refinements, updated defenses undergo rigorous red teaming validation, ensuring that new mitigations are effective. - Continuous Security Evolution

With each cycle, AI defenses become stronger, faster, and more adaptive—outpacing adversarial innovations.

Who Is Responsible for Guardrails?

Ensuring AI security is a shared responsibility between developers, organizations, and users.

1. Model Builders

AI developers play a foundational role in embedding security into models:

- Pre-training safeguards: Filtering out harmful patterns before they become embedded.

- Fine-tuning strategies: Adapting models to specific ethical and security requirements.

- Alignment with safety standards: Ensuring AI remains useful while minimizing risks.

2. Model Users & Adopters

Organizations deploying AI systems must implement additional protective layers:

- System prompt security: Structuring AI interactions to prevent manipulation.

- Runtime monitoring: Detecting and mitigating security threats in real time.

At Enkrypt AI, we facilitate this collaboration by providing state-of-the-art guardrails that integrate seamlessly across AI deployment environments.

Why This Self-Improving Cycle Matters

A security framework that remains static quickly becomes obsolete. The interplay between red teaming and adaptive guardrails ensures:

- Proactive Threat Mitigation: Addressing risks before they escalate.

- Agility in Security: Quickly responding to evolving cyber threats.

- Comprehensive Protection: Layering multiple defensive mechanisms for resilience at scale.

- Trust and Transparency: Demonstrating a commitment to continuous AI security improvement.

Conclusion

A strong AI security system is not defined by a single mechanism but by a dynamic, evolving approach. Red teaming provides the rigorous adversarial testing necessary to refine guardrails, ensuring they remain effective against real-world threats.

At Enkrypt AI, we integrate continuous red teaming and adaptive security into every layer of AI protection. This self-improving cycle allows us to build an AI security system that’s agile to withstand the ever-changing threat landscape.

By embracing the continuous evolution of security threats, we ensure that our risk detection and removal platform remain reliable, resilient and ready for changes ahead.

FAQs: Enhancing AI Guardrails with Red Teaming

1. What are injection attacks in AI?

Injection attacks occur when adversaries craft inputs to manipulate AI models into unintended behaviors. These attacks can:

- Bypass content restrictions

- Extract hidden system prompts

- Inject harmful or misleading responses

2. Why do AI security guardrails need to evolve continuously?

Static guardrails become ineffective over time as new attack techniques emerge. A continuously improving security system anticipates evolving threats rather than just reacting to known ones.

3. What is red teaming in AI security?

Red teaming is a proactive security approach where AI systems are tested with adversarial inputs to uncover vulnerabilities. It involves:

- Simulating real-world attacks

- Stress-testing AI defenses across multiple risk categories

- Continuously refining guardrails based on findings

4. How does red teaming improve AI security?

Red teaming enhances security through a feedback loop that:

- Identifies vulnerabilities in AI defenses

- Improves training data with adversarial examples

- Enhances detection accuracy to reduce false positives/negatives

- Anticipates evolving threats before they become widespread

- Strengthens system integrity by protecting system prompts and configurations

5. How do red teaming and guardrails work together?

Red teaming feeds security insights into guardrail improvements, creating a continuous cycle:

- Red teaming identifies weaknesses

- Guardrails are refined based on findings

- New guardrails undergo further red teaming validation

- AI defenses continuously evolve to stay ahead of threats

6. Who is responsible for AI guardrails?

AI security is a shared responsibility:

- Model builders implement pre-training safeguards, fine-tune models, and align with safety standards.

- Model users & adopters secure system prompts, monitor AI behavior, and enforce runtime security.

- AI security platforms like Enkrypt AI provide advanced guardrails to enhance AI safety across deployments.

7. Why is a self-improving AI security cycle important?

A dynamic security approach ensures:

- Proactive threat mitigation by addressing vulnerabilities before they are exploited

- Agility in security to quickly respond to evolving cyber threats

- Comprehensive protection through multi-layered defense mechanisms

- Trust and transparency by demonstrating continuous AI security improvements

8. How does Enkrypt AI implement red teaming?

Enkrypt AI integrates red teaming into its security framework by:

- Conducting adversarial simulations to test AI resilience

- Covering hundreds of risk categories for a comprehensive security evaluation

- Feeding insights from red teaming into continuously improving AI guardrails

9. How can enterprises strengthen AI security using red teaming?

Enterprises can enhance AI security by:

- Incorporating red teaming into AI development and deployment processes

- Using adversarial testing to validate AI robustness

- Continuously updating AI defenses based on real-world threat insights

.avif)