LLM Safety and Security: How to Select the Best LLM via Red Teaming

LLM and Safety Overview

Large language models (LLMs) serve as the fundamental building blocks of AI applications, playing a pivotal role in powering various AI functionalities through their remarkable ability to comprehend and generate human-like text. These models encompass a wide range of applications, including natural language understanding, content generation, conversational agents, information retrieval, translation and language services, personalization, learning and tutoring, and data analysis.

By harnessing the potential of LLMs, developers can create more intuitive, responsive, and versatile AI applications that significantly enhance productivity and improve user interactions.

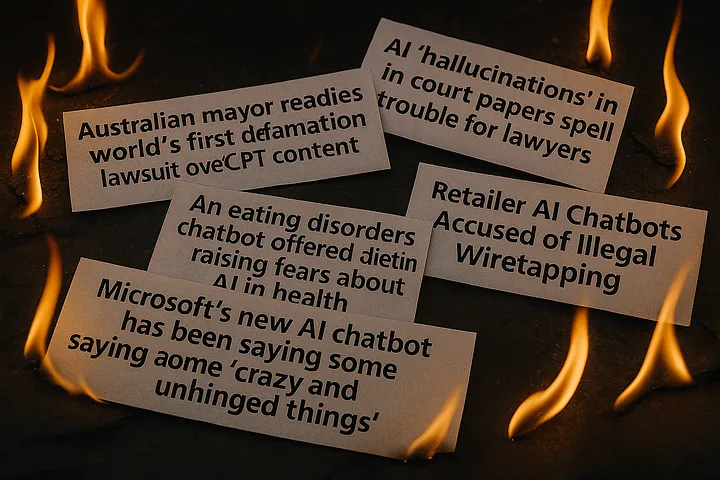

However, the current landscape of LLMs presents a significant challenge in terms of safety and performance. Moreover, objective, third-party evaluations of these models remain scarce and difficult to obtain.

Learnings from the Field: Multiple LLMs to Start With

Hundreds of conversations with AI leaders, CIOs, CTOs, model risk and governance teams, and other business executives revealed that they are initially deploying multiple LLMs across their AI stack, prioritizing specific use cases and exposure. When selecting an LLM, they emphasized three key factors: performance, risk, and cost. Naturally, they aim for an LLM that excels in all three areas, but often, compromises must be made in at least one domain.
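The three-way trade-off described above can be illustrated with a small weighted-scoring sketch. This is not how any particular team or the leaderboard makes the decision; the `Candidate` fields, the 0-to-1 scales, the weights, and the placeholder values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    performance: float  # assumed scale 0..1, higher is better
    risk: float         # assumed scale 0..1, lower is better
    cost: float         # assumed scale 0..1, lower is better


def score(c, w_perf=1.0, w_risk=1.0, w_cost=1.0):
    """Weighted average; risk and cost are inverted so higher is always better."""
    total = w_perf + w_risk + w_cost
    weighted = (
        w_perf * c.performance
        + w_risk * (1 - c.risk)
        + w_cost * (1 - c.cost)
    )
    return weighted / total


def pick(candidates, **weights):
    """Return the candidate with the highest weighted score."""
    return max(candidates, key=lambda c: score(c, **weights))


# Hypothetical placeholder values, not measurements of any real model.
candidates = [
    Candidate("model_a", performance=0.9, risk=0.6, cost=0.7),
    Candidate("model_b", performance=0.7, risk=0.2, cost=0.3),
]

print(pick(candidates).name)               # equal weights
print(pick(candidates, w_perf=10.0).name)  # performance weighted heavily
```

Changing the weights changes the winner, which is the point: the compromise depends on how much each team values performance relative to risk and cost for a given use case.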

Real-world Example: Insurance Company Selection Process for LLMs

Let’s look at an insurance company that wants an AI-powered chatbot that provides safe, compliant, and reliable answers to its customers.

In this example, their LLM selection process involved examining an open source LLM (Llama-3.2-3B-Instruct) and a proprietary LLM (gpt-4o-mini).

Open Source LLM Investigation: Llama-3.2-3B-Instruct

When the insurance company looked up the open source LLM on our leaderboard, here’s what they saw (also refer to figure 1 below):

- Overall ranking: 39 (out of a total of 116 LLMs) – not bad.

- Weakness: Vulnerable to implicit biases and malware.

- Strengths: Impressively low toxicity, even when dealing with challenging prompts.

The pros and cons for this open source LLM (Llama-3.2-3B-Instruct) include:

Proprietary LLM Investigation: gpt-4o-mini

When the insurance company looked up the proprietary LLM on our leaderboard, here’s what they saw (also refer to figure 2 below):

- Overall ranking: 72 (out of a total of 116 LLMs) – in the bottom half of the leaderboard and well behind Llama-3.2-3B-Instruct.

- Weakness: Insanely high bias, particularly in implicit sentence structures. It's like bias on turbo mode.

- Strengths: Remarkably low toxicity, keeping the chat clean and respectful under pressure.

The pros and cons for this proprietary LLM (gpt-4o-mini) include:

After looking at each LLM in detail, the insurance company can compare the two LLMs side by side. Both models performed well on Toxicity, but Llama-3.2-3B-Instruct outperformed gpt-4o-mini on both Bias and Jailbreak. The only category in which gpt-4o-mini did better was Malware. See figure 3 below.
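The comparison can also be written down as a small tally. The sketch below encodes only what the text states: the two overall rankings and which model came out ahead in each category. It assumes that a lower rank number is better, uses no numeric category scores, and its function names are illustrative rather than part of any leaderboard API.

```python
from collections import Counter

TOTAL_MODELS = 116

# Overall leaderboard ranks quoted above (assumed: lower number is better).
RANKS = {"Llama-3.2-3B-Instruct": 39, "gpt-4o-mini": 72}

# Per-category outcome as described in the text; "tie" means both did well.
CATEGORY_WINNERS = {
    "Bias": "Llama-3.2-3B-Instruct",
    "Jailbreak": "Llama-3.2-3B-Instruct",
    "Malware": "gpt-4o-mini",
    "Toxicity": "tie",
}


def tally_wins(winners):
    """Count the categories each model wins outright, ignoring ties."""
    return Counter(model for model in winners.values() if model != "tie")


def rank_fraction(rank, total=TOTAL_MODELS):
    """Position on the leaderboard as a fraction of all models (smaller is better)."""
    return rank / total


wins = tally_wins(CATEGORY_WINNERS)
for model, rank in RANKS.items():
    print(
        f"{model}: rank {rank}/{TOTAL_MODELS} "
        f"({rank_fraction(rank):.0%} of the way down), "
        f"outright category wins: {wins[model]}"
    )
```

A tally like this only summarizes direction, not magnitude. The size of the gap in each category still has to be read from the leaderboard itself.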

Results – Which LLM was Chosen?

Based on the safety and security results on the Leaderboard, and because our insurance customer preferred an LLM that gives them more control over their AI stack, they went with Llama-3.2-3B-Instruct.

How Enkrypt AI Measures LLM Safety and Security

Enkrypt AI’s LLM Safety and Security Leaderboard was created by running automated red teaming tests on the most popular LLMs (116 and counting). We provide these results in real time and for free, as you can see in the link above.

Such a comprehensive evaluation empowers your team to align LLM performance and safety with expectations, ensuring results are accurate, reliable, and socially responsible.

We capture the right metrics for your GenAI applications by grouping risk into 4 major categories and numerous subcategories, as you see below.

LLM Testing Methodology

You can replace months of manual red teaming, along with hours of auditors reviewing regulations, with Enkrypt AI’s Red Teaming solution. The testing methodology consists of 4 easy steps and takes only 4 hours.

Conclusion

The significance of automated and continuous red teaming cannot be overstated when it comes to LLM selection. It serves as a first line of defense against hidden vulnerabilities, a crucial check on complacency, and a roadmap for ongoing improvement. Red teaming goes beyond merely identifying flaws; it cultivates a security-first mindset that should be ingrained in every phase of AI development and deployment.

For organizations, the message is clear: prioritize AI security now, before a high-profile breach or regulatory pressure forces action. Incorporate strong security practices, including thorough red teaming, into your AI development workflows from the very beginning. Invest in the tools, talent, and processes needed to stay ahead of evolving threats.

We are proud to play a role in advancing AI with responsible data that underpins ethical training, testing, mitigation, and monitoring practices.