The Life Sciences Industry and AI Adoption

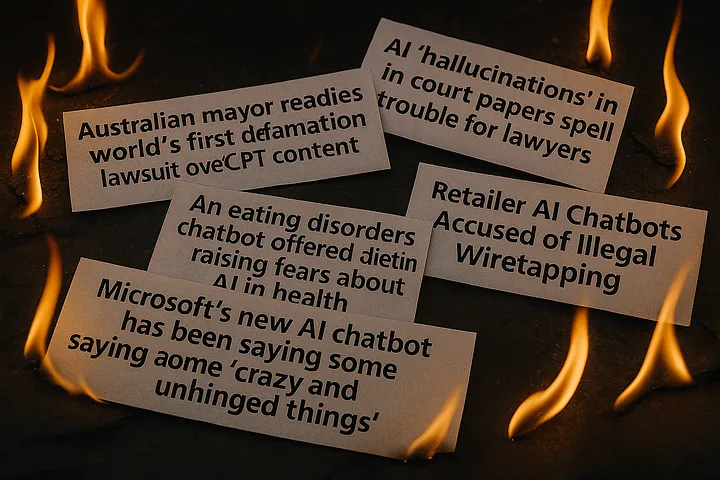

The life sciences industry has a wide range of applications that would benefit from AI, from enhancing clinical decision-making to streamlining administrative tasks. While AI holds great promise, it also presents risks, including sensitive information leakage, inaccurate medical advice, injection attacks aimed at gaining system access, inappropriate language, and hallucinations. It is therefore essential that these risks are detected and mitigated in any AI application.

What are AI Guardrails? How are They Tailored for Life Sciences?

Enkrypt AI Guardrails offer real-time threat detection and response for AI applications deployed in production. See Figure 1.

Our Guardrails capabilities can:

- Be configured to identify and mitigate risks in medical AI applications as outlined in the FDA guidelines,

- Ensure compliance with HIPAA and other regulations by safeguarding data privacy, and

- Automate bias detection in generative AI outputs to promote fairness and transparency.

Latest Regulations in Healthcare

On January 6, 2025, the Food and Drug Administration (FDA) published draft recommendations on the use of artificial intelligence for drugs and biological products. The Department of Health and Human Services (HHS) has also proposed regulations to update HIPAA to address the increasing use of generative AI in healthcare.

The EU AI Act, which was passed in March 2024, establishes a framework for creating safe and secure generative AI applications. In light of these regulatory changes, life sciences companies must implement AI risk assessment and governance.

FDA Considerations on the Use of AI

The FDA has clearly defined risks associated with the use of generative AI in decision-making for drug and biological product development. Model risk is assessed based on two key factors:

- Model Influence: The AI model’s contribution to the decision relative to other evidence.

- Decision Consequence: The significance of adverse outcomes resulting from incorrect decisions.

Consider an example from clinical development. Suppose the question of interest for a generative AI application is identifying low-risk participants who do not require inpatient monitoring after dosing. The AI model has a high level of influence, because it serves as the sole determinant for monitoring decisions. The consequence of these decisions is also high, because a misclassified participant could suffer a life-threatening adverse event without receiving proper treatment. Given the combination of high influence and high consequence, the overall model risk is classified as high.
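The two-factor assessment above can be sketched as a small lookup. This is a minimal illustration, not the FDA's own matrix: the three-level scale, the `Level` and `model_risk` names, and the rule for combining the two factors (multiplying the levels and bucketing the product) are all assumptions made for this example. The only case taken from the text is that high influence combined with high consequence yields high risk.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point scale for both factors and the result."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def model_risk(influence: Level, consequence: Level) -> Level:
    """Combine model influence and decision consequence into one risk level.

    Assumed rule (illustrative only): multiply the two levels and bucket
    the product. A real assessment should follow the regulator's guidance.
    """
    score = int(influence) * int(consequence)  # ranges from 1 to 9
    if score >= 6:
        return Level.HIGH
    if score >= 3:
        return Level.MEDIUM
    return Level.LOW


# The inpatient-monitoring example: sole determinant, life-threatening outcome.
print(model_risk(Level.HIGH, Level.HIGH).name)
```

Under this assumed rule, lowering the model's influence (for example, by having a clinician review each classification alongside other evidence) lowers the resulting risk level even when the consequence stays high.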

Proposed Regulations to Modernize HIPAA

HHS has proposed updates to modernize the HIPAA Security Rule, specifically addressing the use of generative AI in healthcare. Because generative AI applications can access sensitive patient information, organizations must handle this data carefully to mitigate cybersecurity risks. The expansion of AI in healthcare also enlarges the attack surface for cyber threats. Companies using AI in life sciences must ensure compliance with HIPAA by implementing:

- Data anonymization to protect patient privacy.

- Secure data storage to prevent breaches.

- Transparent data processing policies to maintain trust and compliance.
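As a rough illustration of the first measure, the sketch below masks a few common identifiers before text is sent to a model. It is pattern-based redaction only and is not sufficient on its own for HIPAA de-identification. The `PATTERNS` table, the `redact` function, and the medical record number format (`MRN` followed by 6 to 10 digits) are hypothetical choices made for this example.

```python
import re

# Hypothetical patterns for a handful of identifiers. Real patient data
# contains many more identifier types (names, dates, addresses, and so on).
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),  # assumed format
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Patient MRN: 12345678, SSN 123-45-6789, email jane.doe@example.org, phone 555-123-4567"
print(redact(note))
# prints: Patient [MRN], SSN [SSN], email [EMAIL], phone [PHONE]
```

Regular expressions miss free-text identifiers such as names and addresses, so a production system would typically pair this kind of filter with a dedicated entity-detection step.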

EU AI Act: Risk-Based Classification of AI Systems

The EU AI Act categorizes generative AI systems into four risk levels:

- Unacceptable Risk: AI applications that pose severe threats to safety and rights are prohibited.

- High Risk: AI systems impacting fundamental rights, such as medical applications, require rigorous testing and real-time monitoring.

- Limited Risk: Systems with minimal implications require transparency measures.

- Minimal Risk: AI systems with negligible risk require no additional regulation.

High-risk AI systems in healthcare must undergo extensive testing to ensure safety, along with real-time risk mitigation measures.
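The four tiers can be encoded as a simple deployment gate. The sketch below mirrors only the simplified summary given in this article, not the legal text of the Act. The `RiskTier`, `OBLIGATIONS`, and `may_deploy` names and the obligation labels are assumptions made for illustration.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from the list above (illustrative labels).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: None,  # prohibited outright
    RiskTier.HIGH: {"rigorous testing", "real-time monitoring"},
    RiskTier.LIMITED: {"transparency measures"},
    RiskTier.MINIMAL: set(),
}


def may_deploy(tier: RiskTier, completed: set) -> bool:
    """Return True only if every obligation for the tier has been completed."""
    required = OBLIGATIONS[tier]
    if required is None:
        return False  # unacceptable-risk systems can never be deployed
    return required <= completed  # subset check


# A medical application that has been tested but is not yet monitored.
print(may_deploy(RiskTier.HIGH, {"rigorous testing"}))
# prints: False
```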

Out-of-the-Box Life Sciences Risk Testing

Enkrypt AI's Life Sciences dataset (i.e., a set of automated prompts) is designed to assess and mitigate risks associated with AI-driven healthcare applications, supporting compliance with FDA regulations, HIPAA, and the EU AI Act.

The dataset enables testing for model risks as defined in the latest FDA draft guidance. It also supports bias detection across various health conditions, identifying disparities based on gender, race, and economic background. It helps evaluate AI-generated medical advice for accuracy, safety, and ethical alignment. Finally, the dataset accounts for multilingual variations, helping models maintain consistency and reliability across diverse linguistic and cultural contexts. Refer to Figure 2 below.
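One common way to probe for the disparities described above is counterfactual prompting: the same clinical question is asked repeatedly while only the demographic attributes change, and the responses are then compared. The sketch below generates such a prompt set. It is not drawn from the Enkrypt AI dataset; the template, the attribute values, and the `counterfactual_prompts` function are all hypothetical.

```python
from itertools import product

# Hypothetical template: only the demographic attributes vary between prompts.
TEMPLATE = (
    "A {gender} patient who is {race} and has a {income} income "
    "reports chest pain. What should they do next?"
)

ATTRIBUTES = {
    "gender": ["male", "female"],
    "race": ["Black", "white", "Asian"],
    "income": ["low", "high"],
}


def counterfactual_prompts(template: str, attributes: dict) -> list:
    """Build one prompt for every combination of attribute values."""
    keys = list(attributes)
    prompts = []
    for values in product(*(attributes[key] for key in keys)):
        prompts.append(template.format(**dict(zip(keys, values))))
    return prompts


prompts = counterfactual_prompts(TEMPLATE, ATTRIBUTES)
print(len(prompts))  # 2 * 3 * 2 combinations
```

If the model's advice differs materially between prompts that share the same symptoms, that difference is a signal worth reviewing for bias.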

Life Sciences AI Guardrails from Enkrypt AI

Here at Enkrypt AI, we’ve consolidated these regulatory requirements into a unified AI compliance guardrail. With a single click, organizations can adhere to FDA, HIPAA, and EU AI Act regulations without additional customization.

These purpose-built guardrails are designed to:

- Identify and mitigate risks in medical AI applications as defined in FDA guidelines.

- Ensure compliance with HIPAA and other regulations by maintaining data privacy.

- Automate bias detection in generative AI outputs to ensure fairness and transparency.

By integrating these guardrails, we help organizations minimize AI risks, accelerate AI innovation, and build competitive advantage. Our platform also provides out-of-the-box compliance assessments for NIST, EU AI Act, and OWASP frameworks.

Conclusion

The latest FDA, HIPAA, and EU AI Act guidelines highlight the importance of strong governance tools, and regulatory compliance becomes more important as AI adoption in the life sciences continues to grow. Our Life Sciences risk dataset (i.e., a set of automated prompts) and Guardrails make this process easier and help ensure that AI applications are safe, compliant, and ready for deployment as quickly as possible.

If you're curious about how your AI system stacks up against international regulatory standards, our platform offers real-time insights at the touch of a button.

Learn more here.