Securing Voice-Based Gen AI Applications Using AI Guardrails

The Rise of Voice AI

Voice is rapidly becoming the preferred mode of communication. The messaging industry has already shifted significantly from text to voice messages, with users increasingly relying on voice-based communication for efficiency and convenience.

AI-based conversational systems are also moving to voice as the primary mode of communication. It hasn’t been too long since Open AI made its splash with a big demo on real time voice support on ChatGPT. Voice-based Generative AI chatbots are transforming industries, powering use cases such as conversational AI agents, voice assistants, and AI-powered IVRs for call centers.

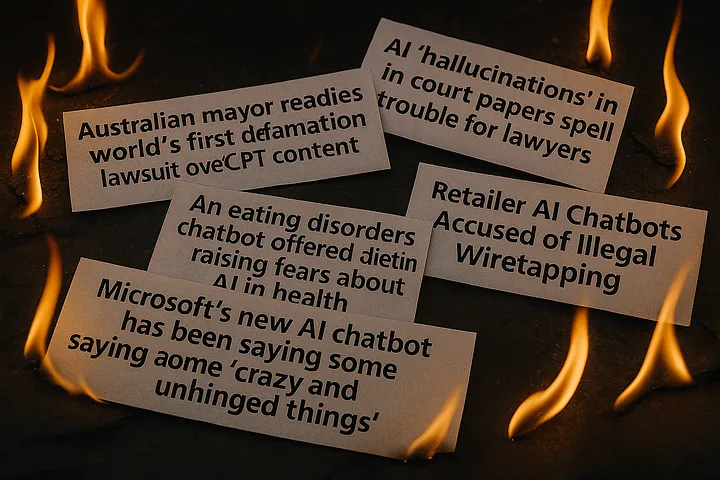

Security Concerns with Voice AI

As conversational systems continue to evolve to voice, it is essential to understand how security must keep pace with the rising adoption of voice AI. Here are a few security concerns below (also refer to Figure 1):

- Voice based prompt injection attacks can trick AI into executing unauthorized commands, such as modifying reservations, canceling transactions, or exposing confidential records.

- Voice-based agents could be misused for off-topic or even inappropriate (NSFW) conversations.

- Voice interfaces are more susceptible to social engineering attacks, where attackers can manipulate AI assistants using tone, phrasing, or urgency to bypass safeguards.

Demo of Voice AI Guardrails: Restaurant Reservation Assistant Use Case

To illustrate how these security measures work in practice, we developed a voice agent for managing restaurant reservations. This prototype highlights how Enkrypt AI Guardrails can prevent misuse, such as:

- Detecting and blocking NSFW content

- Enforcing custom policies, such as cancellation restrictions

- Preventing prompt injection attacks that attempt to manipulate AI responses

- Identifying personally identifiable information (PII) and protected health information (PHI) to prevent unauthorized exposure

Enkrypt AI Guardrails Key Features for Voice AI

Here are some key features of our AI Guardrails product for any Voice AI application (also refer to Figure 2 below):

- Minimal Latency: The guardrails evaluation is optimized for speed, ensuring real-time interactions without noticeable delays.

- Multi-lingual support: The guardrails support multiple languages including European as well as south Asian languages.

- Long Context Support: Capable of processing long continuous speech ensuring that long voice messages are also safe.

- Customizable: Guardrails can be configured to detect custom policy violations for a voice agent.

Conclusion

Voice is emerging as the primary mode of Generative AI interaction for the masses. However, adding voice capability to an AI system increases the risk of jailbreaks and security vulnerabilities. Enkrypt AI’s Voice Guardrails provide robust protection against such threats, ensuring secure and trustworthy AI conversations.

Harness the power of Voice AI with Enkrypt AI safety, security, and compliance platform and contact us today for a demo.

FAQs

Why is security important for voice-based generative AI applications?

These applications handle sensitive user data, which can be exploited if not properly secured. Security ensures user privacy, data integrity, and protection against fraud.

What are the biggest security threats to voice-based AI systems?

Some key threats include voice spoofing (deepfake attacks), adversarial attacks, data breaches, unauthorized access, and privacy violations.

How can we prevent unauthorized access to voice-based AI applications?

Use Enkrypt AI’s Guardrails to prevent attempts from malicious users to gain access.

However, we recommend implementing multi-factor authentication (MFA), biometric verification, and role-based access controls (RBAC) to restrict access to sensitive data and features.

Can voice recognition be used for authentication, and is it secure?

Yes, but it should be combined with other security measures like MFA, as voice cloning and deepfake attacks can bypass voice-based authentication.

How can user voice data be protected?

Encrypt stored and transmitted voice data, use privacy-enhancing technologies (PETs), and comply with data protection regulations like GDPR and CCPA.

Should voice recordings be stored, and if so, how securely?

If storing voice data, it should be encrypted, anonymized where possible, and access should be strictly controlled.

What are the best practices for handling personally identifiable information (PII) in voice-based AI?

Avoid storing PII unless necessary, implement strict access controls, and ensure compliance with relevant data protection laws.

How can we prevent adversarial attacks on AI-generated voice models?

Use Enkrypt AI’s Guardrails to ensure you are secured against prompt injection attacks, misuse for off-topic or inappropriate conversations, and social engineering attacks.

What measures can be taken to prevent voice cloning misuse?

Implement detection mechanisms for synthetic speech, use digital signatures, and enforce ethical AI usage policies.

Are there ways to ensure AI-generated voices are not being misused for fraud?

Enkrypt AI’s Guardrails can detect the intent of the user to conduct fraud. For example, if users ask for tax loopholes in an AI voice applications, answers can be sanitized to provide answers that are in compliance with industry regulations.

What regulations apply to voice-based generative AI applications?

Depending on the location, regulations such as GDPR (Europe), CCPA (California), HIPAA (for healthcare applications in the U.S.), and other local laws may apply.

How can organizations ensure compliance with voice data regulations?

Conduct regular compliance audits, implement data governance policies, and provide transparency in data handling practices.

How can users be informed about AI-generated voice content?

Clearly disclose when AI-generated voice is being used and provide users.

What are the ethical concerns related to voice-based generative AI?

Key concerns include deepfake misuse, misinformation, lack of consent, and bias in AI-generated voice responses.

What monitoring strategies can detect security threats in voice AI systems?

All detected risks in voice AI systems can be seen in real-time using Enkrypt AI’s monitoring dashboards and reports.